Starting an Azure Cloud Shell

Introduction

You will use Azure Cloud Shell to inspect source code and issue Azure CLI commands in this Lab. This Lab Step prepares Cloud Shell for use. Azure Cloud Shell is a shell that runs in your web browser. Cloud Shell comes with several tools installed that are useful for managing resources in Azure, such as the Azure CLI. You can choose between a Linux-style Bash shell or a PowerShell experience. You can switch between the two shell experiences at any time. Cloud Shell is backed by an Azure file share that persists a clouddrive home directory. The shell runs on a temporary host that is free to use. You only pay for the required file share storage.

Some points worth noting are:

- You are a regular user without the ability to obtain root/administrator privileges.

- Cloud Shell will time out after 20 minutes of inactivity.

Neither of these limitations will be an issue for this Lab. In this Lab Step, you will start a Bash Cloud Shell using an existing storage account.

Instructions

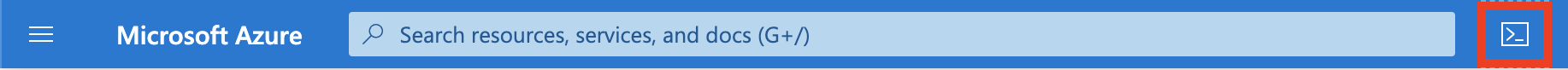

1. Click on the >_ Cloud Shell icon in the menu bar of the Azure Portal Dashboard:

This will open a Cloud Shell console at the bottom of your browser window.

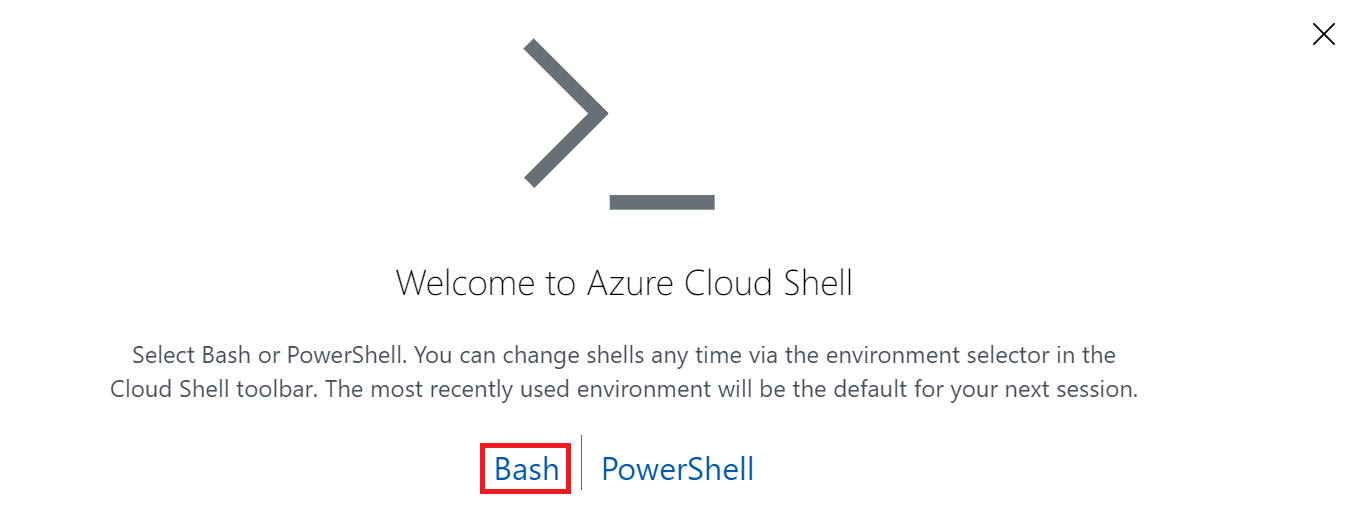

2. Select Bash for your shell experience:

3. Click on Show advanced settings to configure the shell to use existing storage resources:

4. Set the following values in the Cloud Shell storage configuration form, and then click Create storage:

- Subscription: Leave the default value

- Cloud Shell region: Choose the South Central US region (The Cloud Academy Lab environment created a storage account for you in this region.)

- Resource group: Use existing. Select the resource group beginning with cal-

- Storage account: Use existing. Select the only storage account available or the one beginning with cacloudshell if there are multiple

- File share: Create new. Enter cloudshell for the name of the file share

Warning: Make sure to select the region described in the instructions. The image above is only demonstrative.

Warning: If you still can't reach the storage account, make sure the deployment reached 100%. You can check it under Resource groups -> ca-lab-### -> Deployments.

The file share creation should complete within a few seconds, and your Cloud Shell will display progress as it initializes.

5. Wait until you see the prompt ending with $ indicating your Cloud Shell is ready to use:

Summary

In this Lab Step, you started a Cloud Shell from the Azure Portal. You configured the shell to use an existing storage account and created a new file share in the storage account to persist your clouddrive home directory.

Reviewing the Application You will Containerize

Introduction

This Lab begins with the source code of a client-side JavaScript application. In this Lab Step, you will briefly review the application and how it will be containerized. In the following Lab Steps, you will push the image to an Azure Container Registry (ACR), deploy the containerized application in Azure Kubernetes Service (AKS), and access the application running in the Kubernetes cluster.

Instructions

1. In your Cloud Shell, enter the following commands to clone the application's source Git repository into the clouddrive:

git clone https://github.com/cloudacademy/javascript-tetris.git clouddrive/javascript-tetris

cd clouddrive/javascript-tetris

The clouddrive directory persists across Cloud Shell sessions.

2. Open Cloud Shell's built-in code editor:

code .

3. Expand the www directory to inspect its contents:

The application consists of an HTML file (index.html) that also contains inline JavaScript code, as well as a JavaScript stats library (stats.js) and a background texture image (texture.jpg). You can look through the contents of the files, but an analysis of the code is outside the scope of this Lab.

4. Double-click Dockerfile to view its contents in the editor:

The Dockerfile lists the commands required to create a container image for the application:

- line 1: Start from the nginx:alpine image. Nginx is the web server used to serve the application. The alpine tag is a lightweight variant of the Nginx image built on Alpine Linux, which keeps the application image small.

- line 3: Copy the source files into the /www directory in the image

- line 4: Copy the included Nginx configuration (nginx.conf) into the image's default configuration path. This causes the container to load the given configuration by default.

- line 6: Expose port 8000 so that incoming connections on port 8000 are allowed. The provided Nginx configuration causes the server to listen on port 8000.

- line 8: Set the container start up command to write log messages to standard output and start Nginx. The daemon off string is required to start Nginx in a container.
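The line-by-line description above can be sketched as a file. This is a hypothetical reconstruction from the description only, written to a scratch location, and the repository's actual Dockerfile may differ. The source directory name (www), the /etc/nginx/nginx.conf destination, and the exact CMD form are assumptions.

```shell
# Write a reconstructed Dockerfile to a scratch directory (not the real file).
sketch_dir=$(mktemp -d)
sketch="$sketch_dir/Dockerfile"

cat > "$sketch" <<'EOF'
FROM nginx:alpine

COPY www /www
COPY nginx.conf /etc/nginx/nginx.conf

EXPOSE 8000

CMD ["nginx", "-g", "daemon off;"]
EOF

# Print the file with line numbers to compare against the list above.
cat -n "$sketch"
```

The blank lines are placed so that the instructions fall on lines 1, 3, 4, 6, and 8, matching the numbering used in the description.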

5. Close the code editor by clicking the ellipsis (...) in the upper-right corner followed by Close Editor:

Azure Cloud Shell itself runs in a Docker container. As a result, Cloud Shell cannot run a Docker daemon. Although it is technically possible to run Docker in a container, Cloud Shell's container is not started in privileged mode for security reasons and therefore cannot use the host's Docker socket. To test the program, you will run a web server provided by a Node.js package rather than using a container. This is only a workaround for Azure Cloud Shell. In practice, you should use the same image for testing and production.

6. Serve the application on port 8000 using a simple HTTP server provided by Node.js:

npx http-server www -p 8000

The application is now running on port 8000 in Cloud Shell. You can access Cloud Shell's port 8000 by using the web preview feature of Cloud Shell.

7. Click the web preview icon with a file and a magnifying glass and select Configure:

You can configure the port that the web preview feature connects to in Cloud Shell.

8. Enter 8000 as the port and click Open and browse:

This opens a new browser tab with the application running in it:

Feel free to try it out and confirm the application works.

9. Close the tab and press ctrl+c in Cloud Shell to stop the server.

Summary

In this Lab Step, you reviewed the application that you will containerize and deploy later in the Lab.

Creating an Azure Container Registry (ACR)

Introduction

Azure Container Registry (ACR) is a fully-managed Docker image registry. By using ACR, you get a Docker registry within Azure allowing you to minimize network latency and data transfer costs when deploying containers in Azure. You can also easily replicate the registry across regions. ACR is also integrated with Azure Active Directory making access control for your private registry similar to how you manage access to other Azure resources.

In this Lab Step, you will create an Azure Container Registry that you will use to publish the application's image making it available to deploy to Kubernetes later in the Lab. You will use the Portal rather than the Azure CLI because it is easier to explore what is available in ACR through the Portal.

Instructions

1. In the search bar at the top of the Azure Portal, enter container registry and click Container Registry under Marketplace:

2. In the Create container registry form, enter the following values leaving the defaults for the rest:

- Registry name: calabsregistry##### where you replace ##### with random digits (the registry name must be unique within Azure)

- Resource group: Select the available resource group from the drop-down menu. It begins with cal-. (This automatically sets the correct Location)

- Location: Leave the automatically selected location after selecting the Resource group

- SKU: Basic (Different SKUs vary in their performance and scale. Basic is sufficient for this Lab)

3. Click Review + create.

4. Click Go to resource in the Deployment succeeded notification:

From the Overview blade, you can see a quick summary of the registry including the Login Server URI and the Usage:

You can also see the other menu categories available, although some categories only apply to the premium SKU, such as Firewalls and virtual networks. One of the menu categories is Tasks. You will use a task to build the application container image and add it to the registry in the next Lab Step.

Summary

In this Lab Step, you created a Docker registry in Azure using Azure Container Registry. Note that you could achieve a similar result using the az acr create command in the Azure CLI.
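As a rough sketch of the CLI route mentioned above, the same registry settings could be passed to az acr create. The wrapper function name and the example arguments are hypothetical; the resource group and registry name would be the ones used in the Portal form.

```shell
# Hypothetical wrapper: create a Basic-SKU registry in an existing resource group.
# $1 = resource group name, $2 = registry name (must be unique within Azure)
create_registry() {
  az acr create --resource-group "$1" --name "$2" --sku Basic
}

# Example call (not run here; replace both values with your own):
# create_registry cal-example calabsregistry12345
```

The location is not given because, as with the Portal form, the registry defaults to the location of the resource group.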

Building a Container Image using ACR Tasks

Introduction

The common commands used for pushing Docker images to a Docker registry, namely:

docker build...

docker tag...

docker push...

can be used with Azure Container Registry as with any Docker registry. However, ACR also offers an additional approach, ACR Tasks, that you will explore in this Lab Step. ACR Tasks are useful in general, and they are the preferred approach in Azure Cloud Shell because Cloud Shell cannot run the Docker daemon. They are also a good fit wherever you don't need or want a full Docker installation. As you will see, ACR Tasks use standard Dockerfiles and standard docker commands, with the benefit that the tasks run on Azure's managed infrastructure. You are charged a flat per-second fee while a container build task runs.
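For comparison, the three Docker commands might look like the following when targeting an ACR registry. This sketch only prints the commands, since Cloud Shell cannot run Docker. The function name and the registry login server are hypothetical, and authenticating to the registry beforehand is assumed.

```shell
# Print (do not run) the build, tag, and push steps for a given registry and image.
# $1 = registry login server, $2 = image name with tag
print_docker_steps() {
  printf 'docker build -t %s .\n' "$2"
  printf 'docker tag %s %s/%s\n' "$2" "$1" "$2"
  printf 'docker push %s/%s\n' "$1" "$2"
}

print_docker_steps calabsregistry12345.azurecr.io app/tetris:1.0
```

An ACR quick task replaces all three steps with a single command that runs on a managed build agent.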

Instructions

1. In your Azure Cloud Shell, view the Azure CLI help for ACR Tasks:

az acr build --help

The az acr build command creates a quick task which is a simplified way of performing a container image build and push to ACR. There is also the az acr task command for more complex tasks. The quick task is all you need in this Lab. The examples at the bottom of the output illustrate how to perform common image build scenarios:

2. Enter the following commands from inside the clouddrive/javascript-tetris directory to start an ACR Task that builds the application's container image:

# Store ACR name (the query is quoted so the shell does not treat [0] as a glob pattern)

acr_name=$(az acr list --query "[0].name" -o tsv)

# Run an ACR quick task to build image and push to app/tetris repository in ACR

az acr build -t app/tetris:1.0 -r "$acr_name" .

The quick task begins by packaging the directory contents and uploading it for processing by an ACR build agent. The agent then runs the usual Docker commands and the logs are streamed to your Cloud Shell. Once the build succeeds the agent automatically pushes the image to ACR. Messages from the build agent appear in white color:

The output also shows any dependencies of the image. In this case, the library/nginx image is depended upon and it shows the image source is registry.hub.docker.com (Docker Hub registry).

3. In the Azure Portal, view the Tasks blade of your ACR registry:

Here you can find a record of all tasks executed in ACR including the logs.

4. Click Repositories in the menu to view the repository containing the built and pushed image:

You can view all of the tags corresponding to different versions of images by selecting a repository. There is only one tag (1.0) at the moment.
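The same tag list can also be read from Cloud Shell. The following is a minimal sketch using az acr repository show-tags; the wrapper function name is hypothetical, and it assumes the acr_name variable from the build step is still set.

```shell
# Hypothetical wrapper: list the tags of one repository in a registry.
# $1 = registry name, $2 = repository name
list_tags() {
  az acr repository show-tags --name "$1" --repository "$2" --output tsv
}

# Example call (not run here):
# list_tags "$acr_name" app/tetris
```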

Summary

In this Lab Step, you used an ACR quick task to build a Docker image and automatically push it to an ACR container registry. The image is now available to consumers in Azure with ACR pull image authorization.

ACR Tasks can also be configured to automatically build images when you commit code, run tests, or when base images are updated. You can read more in the ACR Tasks documentation after completing the Lab.

Inspecting the Created AKS Cluster

Introduction

Azure Kubernetes Service (AKS) allows you to run fully-managed Kubernetes clusters in Azure. By using AKS you also automatically use Azure Active Directory for access control in the cluster. Because Kubernetes clusters leverage many resources in Azure, they take a substantial amount of time to create. To reduce the amount of time waiting, the Cloud Academy Lab environment created a cluster for you when you started the Lab. The cluster takes around 18 minutes to create completely. In this Lab Step, you will first look at how to create an AKS cluster in the Portal to see the available configuration options. Afterward, you will inspect the pre-created AKS cluster and ensure it is ready for use.

Instructions

1. In the search bar at the top of the Azure Portal, enter aks and click Kubernetes services under Services:

2. For now, ignore the ca-labs-cluster and click + Add to simulate creating another cluster.

3. In the Create Kubernetes cluster blade, read through the descriptions and field tooltips (hover over the i icons) on the Basics, Node Pools, Authentication, Networking, and Monitoring tabs to learn more about AKS configuration options.

As an example, Virtual nodes are useful for quickly scaling your cluster when you don't have enough resource capacity on the nodes in your node pools:

Virtual nodes use Azure Container Instances (ACI) which provide serverless compute resources for running containers. Technically, the feature is enabled via the virtual kubelet which allows virtual nodes to appear as normal nodes to Kubernetes. ACIs can be used directly without AKS but for production-ready orchestration, AKS with ACI is preferred. Virtual nodes and other features may not be available in all regions. Virtual nodes are not in the scope of this Lab but you will become familiar with other AKS features as the Lab proceeds.

4. Close the Create Kubernetes cluster blade.

5. Click ca-labs-cluster in the Kubernetes services table:

This is the cluster created by the Lab environment.

6. Read the cluster specs on the Overview blade:

Note: You can safely ignore the The infrastructure resource group 'MC_cal-... error message. You do not have access to the resource group containing the underlying cluster infrastructure. It does not impact your ability to complete the lab.

The cluster has 2 nodes (Standard B2s), which combine to provide 4 Total cores and 8 GB Total memory. The Kubernetes API server address is also given.
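The totals follow directly from the node count and the node size. As a small worked example, assuming each Standard B2s node provides 2 cores and 4 GB of memory (the per-node figures implied by the totals on the blade):

```shell
# Per-node figures are assumptions derived from the totals shown in the Overview blade.
nodes=2
cores_per_node=2
memory_gb_per_node=4

total_cores=$((nodes * cores_per_node))
total_memory_gb=$((nodes * memory_gb_per_node))

echo "Total cores: $total_cores"
echo "Total memory: $total_memory_gb GB"
```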

Note: The cluster Status should be Succeeded before moving on to the following Lab Steps. If the Status is not Succeeded, you can continue through this Lab Step and wait for the cluster at the end.

7. Click Networking in the left sidebar to view details about the cluster networking.

You will see something similar to:

HTTP application routing is enabled by the HTTP application routing addon, which is configured in the Networking tab of the Create Kubernetes cluster blade. It creates a DNS zone and automatically creates DNS records for accessing applications running in Kubernetes.

8. Click Node pools in the navigation menu and see the details of the cluster's single node pool:

The node pool has 2 nodes (NODE COUNT). It is possible to create multiple node pools and to scale node pools as your cluster requirements change. Besides this view of node pools, you don't have insight into the resources that Azure manages for you in AKS from this blade. However, AKS does allow users to see what resources are being used by AKS. AKS automatically creates a separate resource group for its managed resources. It is recommended not to modify the resources in the managed cluster resource group so the student Lab user does not have any access to it. The following instruction shows what is included in an example managed cluster resource group.

9. Observe the following screenshot of the resources in the managed cluster resource group that is automatically created whenever an AKS cluster is created (not accessible to the student user):

The AKS cluster includes:

- An Availability Set to distribute nodes in the node pool across fault and upgrade domains to improve availability

- A Load balancer for balancing requests to the highly available Kubernetes master that is fully managed by Azure and not directly accessible to users

- The DNS zone for AKS HTTP application routing is also shown.

10. Return to the Overview blade of the ca-labs-cluster AKS cluster and wait for the Status to reach Succeeded if it isn't already.
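The Status can also be polled from Cloud Shell instead of the Portal. The following is a minimal sketch, assuming the status shown on the blade corresponds to the provisioningState property returned by az aks show. The function names, the 30-second interval, and the attempt limit are hypothetical choices.

```shell
# Print the provisioning state of a cluster.
# $1 = resource group name, $2 = cluster name
cluster_state() {
  az aks show --resource-group "$1" --name "$2" --query provisioningState --output tsv
}

# Poll until the state is Succeeded, giving up after 40 attempts (about 20 minutes).
wait_for_cluster() {
  attempts=0
  state=""
  while [ "$attempts" -lt 40 ]; do
    state=$(cluster_state "$1" "$2")
    if [ "$state" = "Succeeded" ]; then
      echo "Cluster $2 is ready"
      return 0
    fi
    attempts=$((attempts + 1))
    sleep 30
  done
  echo "Gave up waiting; last state: $state" >&2
  return 1
}

# Example call (not run here; replace the resource group name with your own):
# wait_for_cluster cal-example ca-labs-cluster
```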

Summary

In this Lab Step, you explored AKS cluster configuration settings and inspected the AKS cluster the Cloud Academy Lab environment created for you.

Deploy the Application to AKS

Introduction

You will deploy the application on AKS in this Lab Step. You will perform the following tasks to deploy:

- Get credentials to authenticate kubectl commands sent to the Kubernetes cluster

- Create a manifest file declaring the required Kubernetes resources

- Create the resources in the cluster

You will finish by confirming that you can access the application.

Instructions

1. In Azure Cloud Shell, get credentials for the Kubernetes cluster:

# Store the resource group's name
group_name=$(az group list --query "[0].name" -o tsv)
# Store the AKS cluster's name
cluster_name=$(az aks list --query "[0].name" -o tsv)
# Get credentials for cluster
az aks get-credentials --name "$cluster_name" -g "$group_name" --admin

The credentials give you admin access to the cluster. In production, you would want to create a role with the minimal set of permissions required to perform the job, but this Lab will use admin. For an understanding of Kubernetes roles, try the Securing Kubernetes Clusters Lab on Cloud Academy. The credentials are downloaded to your user's ~/.kube/config file to automatically work with kubectl.

2. Confirm that the credentials grant you access to the cluster by listing the Pods in all namespaces:

kubectl get pods --all-namespaces

The command successfully retrieves the Pods running in the cluster. Notice the following Pods and the functionality they provide:

- addon-http-application-routing-...: Supports the HTTP application routing feature that is enabled for this cluster

- azure-cni-networkmonitor-...: Provides monitoring for the Azure CNI. Azure CNI allows Pods to use Azure virtual networks for container networking (Enabled by choosing advanced networking when creating a cluster)

- omsagent-...: Supports sending logs to a workspace in Azure Log Analytics (Enabled by choosing enable container monitoring when creating a cluster)
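To look at the nodes themselves and at which credentials kubectl is using, a sketch along these lines could be run as well. The function name is hypothetical; both kubectl subcommands are standard.

```shell
# Show the active kubeconfig context, then the cluster's nodes with extra detail.
show_cluster_access() {
  kubectl config current-context
  kubectl get nodes -o wide
}

# Example call (not run here):
# show_cluster_access
```

For this Lab's cluster, the node list would be expected to contain the two nodes of the single node pool.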

3. Create a Kubernetes deployment manifest file and open it in the code editor:

touch app.yaml
code app.yaml

4. Paste the following multi-resource manifest into app.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tetris
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tetris
  template:
    metadata:
      labels:
        app: tetris
    spec:
      containers:
      - name: tetris
        image: {{acr_name}}/app/tetris:1.0 # image in ACR
        resources: # include resources for better scheduling
          requests:
            cpu: 100m
            memory: 128Mi
          limits:
            cpu: 250m
            memory: 256Mi
        ports:
        - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: tetris
spec:
  ports:
  - port: 80 # Access on service port 80
    protocol: TCP
    targetPort: 8000
  selector:
    app: tetris
  type: LoadBalancer # External Access via load balancer service

Read through the file to see how the resources are configured. The manifest file consists of a Deployment and a Service. The Deployment creates two replicas of the application and pulls the image from ACR. The Service creates a load balancer with an external IP for accessing the Pods created by the Deployment. The Service is available on port 80, the standard HTTP port, and forwards traffic to port 8000 on the Pods.

5. Save and close the file by pressing the following key combinations:

- Windows and Linux: ctrl+s ctrl+q

- macOS: cmd+s cmd+q (click the x to close the file if your browser overrides cmd+q)

6. Replace the placeholders in the manifest with the required values:

# Store ACR DNS name

acr_name=$(az acr list --query "[0].loginServer" -o tsv)

# Replace the placeholders with the values using stream editor (sed)

sed -i "s/{{acr_name}}/$acr_name/" app.yaml

You can optionally confirm that the placeholder has been substituted with its real value.
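One way to confirm the substitution is to search the file for the placeholder after running sed. The sketch below demonstrates the idea on a scratch file rather than on app.yaml. The login server value is hypothetical, and the output is written to a second file instead of editing in place so the example also runs where sed -i behaves differently.

```shell
# Scratch manifest containing only the line with the placeholder.
manifest=$(mktemp)
printf '        image: {{acr_name}}/app/tetris:1.0 # image in ACR\n' > "$manifest"

# Hypothetical registry login server standing in for the value returned by az acr list.
login_server="calabsregistry12345.azurecr.io"

# Substitute the placeholder, writing the result to a second file.
sed "s/{{acr_name}}/$login_server/" "$manifest" > "$manifest.out"

# Report whether any placeholder is left, then show the image line.
if grep -F -q '{{acr_name}}' "$manifest.out"; then
  echo "placeholder still present"
else
  echo "placeholder replaced"
fi
grep 'image:' "$manifest.out"
```

Against the real file, the equivalent check is simply grep 'image:' app.yaml.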

7. Create the resources in the manifest file to deploy the application:

kubectl create -f app.yaml

The load balancer service will configure an Azure Load Balancer. There is some delay before the external IP of the load balancer is ready to allow you to access the application from your browser.

8. Watch the service until the EXTERNAL-IP appears:

watch kubectl get service tetris

9. Copy the EXTERNAL-IP and navigate to it in a new browser tab:

The application is now deployed!

10. In the Cloud Shell, press ctrl+c to stop watching the service.
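As an alternative to watching the service interactively, the external IP can be read with a jsonpath query and polled in a loop. This is a minimal sketch; the function names, the 10-second interval, and the attempt limit are hypothetical choices.

```shell
# Print the external IP of a LoadBalancer service, or nothing if it is not assigned yet.
# $1 = service name
external_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll until an IP is assigned, giving up after 30 attempts (about 5 minutes).
wait_for_ip() {
  tries=0
  while [ "$tries" -lt 30 ]; do
    ip=$(external_ip "$1")
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    tries=$((tries + 1))
    sleep 10
  done
  return 1
}

# Example call (not run here):
# wait_for_ip tetris
```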

Summary

In this Lab Step, you deployed an application on AKS using a container image stored in ACR. You exposed the application to external clients by using a load balancer service. You could also use a Kubernetes ingress and the HTTP application routing AKS addon to allow external clients access.

Monitoring Applications running in AKS

Introduction

AKS provides some additional facilities for monitoring applications running in AKS. The standard methods that work with any Kubernetes cluster, such as kubectl logs and kubectl top, also work in AKS. This Lab Step focuses on the additional capabilities AKS offers. The Lab's Kubernetes cluster has container monitoring enabled. This enables everything beyond the standard metrics collection, such as node agent CPU and memory utilization. Recall the OMS (Log Analytics) agent Pods running in the cluster, which are responsible for shipping logs and metrics to Log Analytics. The views in this Lab Step use the data stored in Log Analytics.
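For reference, the standard methods mentioned above could be used as follows for the application deployed in this Lab. The function name is hypothetical, and the sketch assumes the Deployment is named tetris and that cluster metrics are available to kubectl top.

```shell
# Standard Kubernetes checks that work on any cluster, including AKS.
standard_checks() {
  # Last 20 log lines from one Pod of the tetris Deployment
  kubectl logs deployment/tetris --tail=20
  # Current CPU and memory usage per node and per Pod
  kubectl top nodes
  kubectl top pods
}

# Example call (not run here):
# standard_checks
```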

Instructions

1. In the Azure Portal, click Monitoring > Insights in the left navigation menu of the ca-labs-cluster blade:

2. Observe the charts displayed under the Cluster tab:

This view gives you a high-level overview of the cluster with metrics streaming in every minute. The node pool is currently oversized based on the Node CPU Utilization and Node memory utilization percentages. If you had such low utilization in practice you could consider downsizing the cluster and potentially leveraging ACI for bursts of work. The Active pod count in the image above is 22, which includes all of the Pods in the kube-system namespace. All Pods are Running so the cluster appears to be in good shape.

3. Click the Nodes tab and expand one of the nodes in the table to see Pods running on the node:

The CPU usage is expressed as the 95th percentile, meaning the CPU is being used less than or equal to the stated amount 95% of the time. The utilization is expressed in percentage (95TH %) and in terms of CPU cores (95TH) with units of millicores. You can adjust the statistic and the metric using fields just above the table.
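To make the 95th percentile concrete, the sketch below computes it for a set of made-up CPU samples using the nearest-rank method. The function name and the sample values are hypothetical, and the Portal may use a different interpolation method.

```shell
# Read one number per line on stdin and print the nearest-rank 95th percentile.
percentile95() {
  sort -n | awk '
    { values[NR] = $1 }
    END {
      if (NR == 0) exit 1
      # Nearest rank: ceil(0.95 * NR), computed with integer arithmetic
      rank = int((95 * NR + 99) / 100)
      print values[rank]
    }'
}

# 20 hypothetical samples in millicores: 10, 20, ..., 200
seq 10 10 200 | percentile95
```

With these 20 samples the result is 190, since 19 of the 20 values (95%) are less than or equal to 190.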

4. Find and select one of the tetris containers (indicated by a blue square icon) to open a blade containing details about the container:

You can select any item to get more details about it.

5. In the tetris blade click View in analytics > View container logs:

This takes you to the Log Analytics view where a query is executed to gather logs from the container:

The table below the query gives all of the logs within the given time range. Depending on the container you chose, you might be able to find the request you sent to load the application:


6. In the left-side panel enter events in the Tables search bar and click the eye icon to the right of ContainerInsights > KubeEvents to view events Kubernetes has logged.

This automatically populates a query:

The events are then displayed in the table. You can easily inspect other logs by using the schema panel. If you are familiar with SQL, you can be even more efficient at gaining insights by writing your own Log Analytics queries. Note that the query results can be displayed in table format or as a bar chart:

Summary

In this Lab Step, you explored how to monitor an AKS cluster using the logs and metrics streamed to Log Analytics.

If you have time remaining in your Lab session, you can explore some of the other tabs in the Insights blade and execute other Log Analytics queries.
